Project Setup#

There are two implementations of this project. One utilizes cloud storage, while the other relies on local storage.

Tip

Before settings up this project, install Python 3.8 or later. Support for Python 3.7 and earlier may be deprecated for some dependencies in this project.

Attention

This setup is for MAC OS only using Z shell (zsh). I will be providing setup instructions for Windows OS in a later update.

PostgreSQL Setup#

Installation#

Install PostgreSQL and start the database server. You can watch the MAC OS installation video to assist you with the installation.

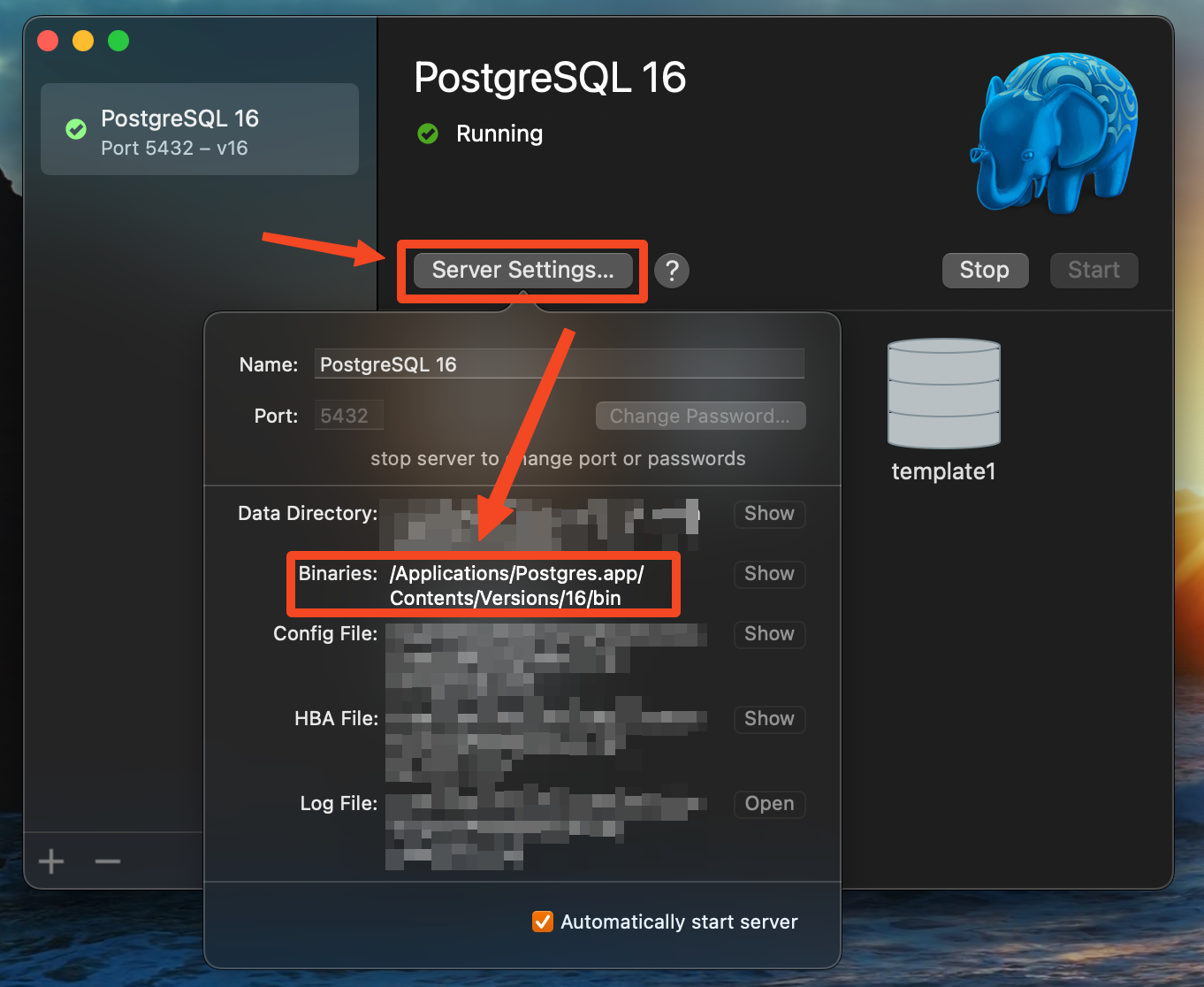

Configure PATHS File#

After installing PostgreSQL, you will need to complete the below steps so that the psql command will work in Terminal.

These steps will add the PostgreSQL binaries path to the paths file on your computer:

- Open

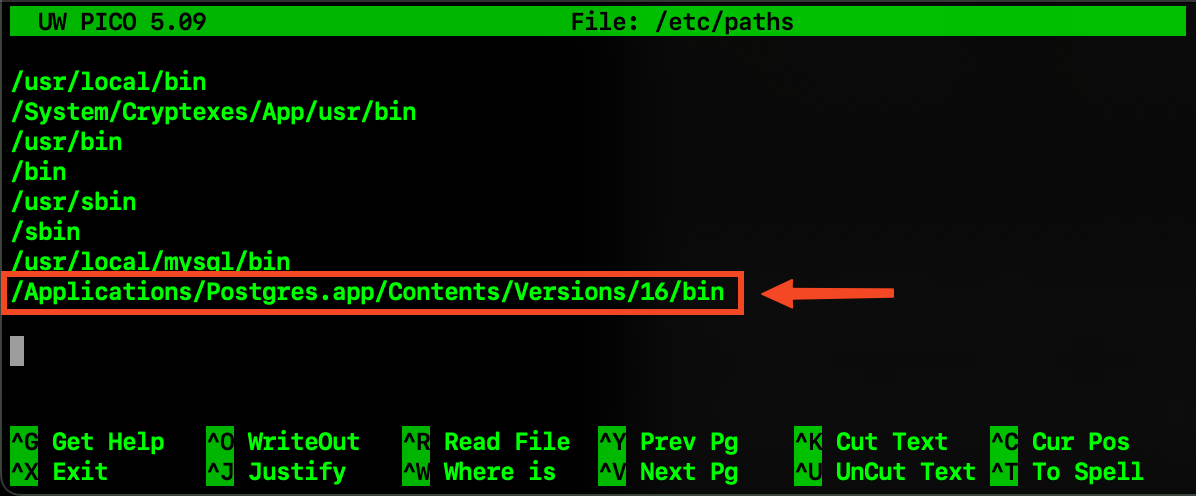

Terminaland typesudo nano /etc/paths, then press Enter to open thepathsfile.Note

You will be prompted to enter a password to edit the

pathsfile.Open the

PostgreSQLapp and make sure the server is runnning by clicking Start.Press

Control + O, then Enter to save the contents to thepathsfile.Lastly, press

Control + Xto exit thepathsfile.Close

Terminaland relaunch it.

Upon relaunching Terminal, you should find that you can now utilize the psql command.

Caution

If you do not complete the above steps, then you will get psql: command not found when trying to execute the psql command in Terminal.

Create Database#

Attention

The below setup is for the local implementation only. If you’re not using the local setup, please skip to Clone GitHub Repo.

We need to set a password for the local database connection which will be used later to connect dbt.

Enter the below in Terminal to launch the PostgreSQL command line interface (CLI):

psql -U postgres

To set the password, enter \password postgres. You’ll be prompted to create a password.

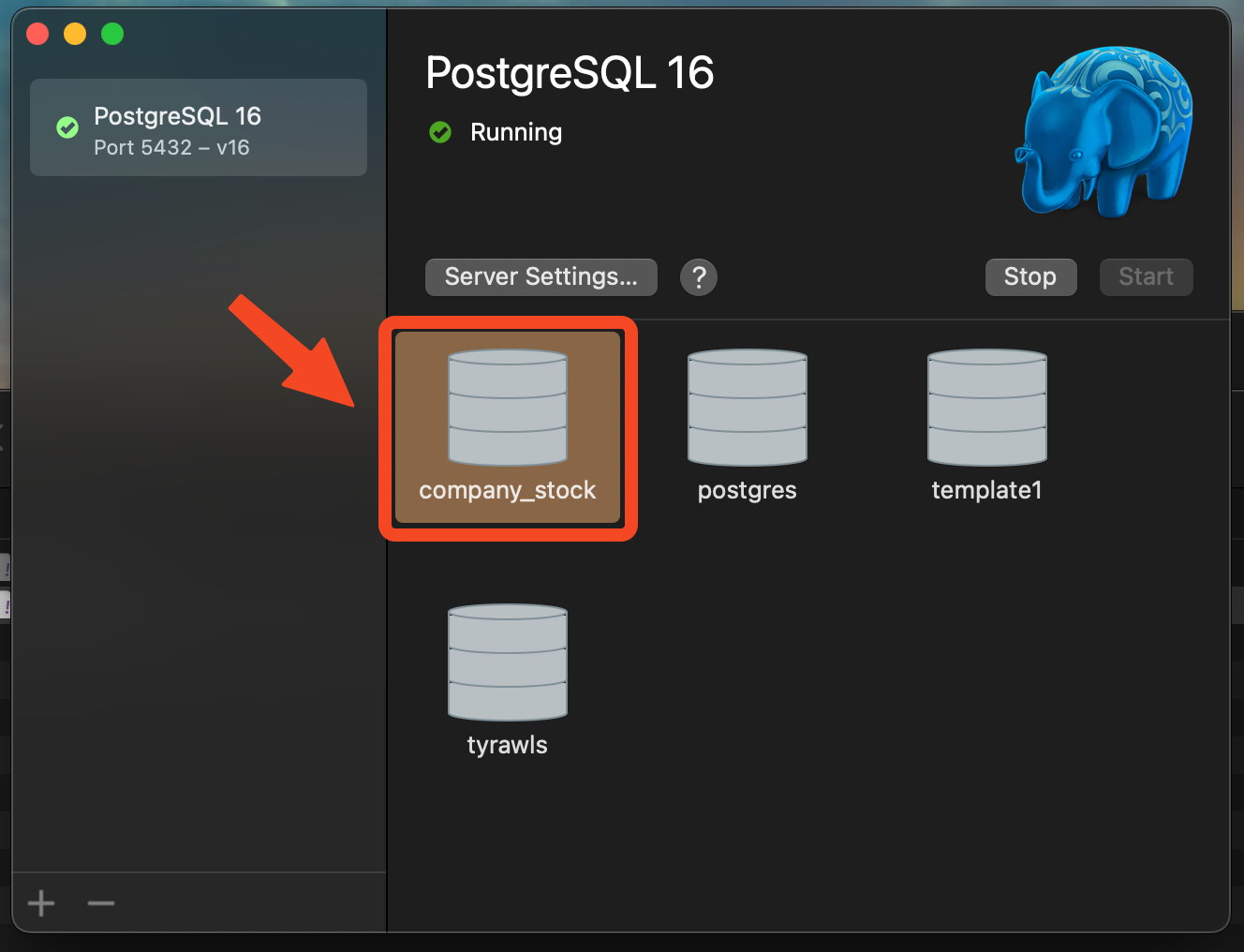

You must establish a database called company_stock to store the stock data.

Enter the below command in the PostgreSQL CLI:

CREATE DATABASE company_stock;

Upon successful creation, you should observe it within the PostgreSQL app. Open the PostgreSQL app to verify that the database was created

Enter \q in the PostgreSQL CLI to exit.

Clone GitHub Repo#

Open Terminal and navigate to a directory of your choice. Clone the GitHub repository by running the below command:

git clone https://github.com/tyrawls/portfolio-optimization.git

This will copy all the project files to your current directory.

Install Requirements#

Navigate to the cloud or local storage directory in Terminal after you have cloned the GitHub repository:

cd portfolio-optimization/cloud-storage # directory for cloud setup

cd portfolio-optimization/local-storage # directory for local setup

Note

You only need to choose one directory. The local directory is more simple, but the cloud directory requires more setup. To configure the cloud setup, you’ll be required to establish three components within Amazon Web Services (AWS).

Amazon S3 storage for staging data

Amazon Lambda to trigger data transfer to the database

Amazon RDS for

PostgreSQLdatabase storage

Create a Python virtual environment and activate it:

python -m venv .venv

source .venv/bin/activate

You should now be in your virtual environment (.venv).

Example

(.venv) (base) rootuser@hostname local-storage %

Upgrade the pip version:

pip install --upgrade pip

Install the dependencies into the Python virtual environment:

pip install -r requirements.txt

AWS Configurations#

Attention

The below setup is for the cloud implementation only. If you’re not using the cloud setup, please skip to dbt Setup.

S3 Bucket#

You will need to create a S3 bucket to stage your data before it goes to the database.

To gain access to read and write data to the S3 bucket from your device, you must acquire your AWS Access Key ID and AWS Secret Access Key.

These credentials can be obtained from your AWS account within the

Security Credentials section.

Once you’ve obtained your AWS keys, you will need to configure them by executing the below in Terminal to access the AWS CLI:

aws configure

You will be prompted to enter the below:

AWS Access Key IDAWS Secret Access KeyDefault region name(optional)Default output format(optional)

Once you’ve set your AWS keys, you may view your credentials by entering the below in a new Terminal window:

cd ~ && cd .aws && nano credentials

Finally, you’ll need to modify the bucket variable in the utils.py file located in the cloud-storage folder,

specifically on line 244, with the name of your S3 bucket. If you cannot view the line numbers, then you can locate the

bucket variable inside the get_historical_stock_data() function.

Lambda#

When data is stored into the S3 bucket, a PutObject event occurs. This event can be used as a trigger to transfer data from S3 to RDS (PostgreSQL).

You will need to complete the following:

Create a Lambda Function with a Python 3.8 runtime

Create a Trigger and select the S3 bucket you created as the Source

Set the Trigger Event Type to

PUTand click Add to create the triggerFrom the Lambda Function, click on the Upload From button and upload the

lambda_function.zipfile located inportfolio-optimization/cloud-storage/aws-lambda-package/zip-filesCreate a Lambda Layer with a Python 3.8 runtime and upload the

python.zipfile located inportfolio-optimization/cloud-storage/aws-lambda-package/zip-filesClick on Configuration > General configuration and set the Timeout to 30 secs.

Click on Configuration > Environment variables and create Environment Variables for your RDS (

PostgreSQL) connection. You will need to set theKeyandValuewith your database credentials.

Key |

Value |

|---|---|

DBNAME |

company_stock |

USER |

|

PASS |

|

HOST |

|

PORT |

5432 |

dbt Setup#

In order to conduct data transformations within the database, we must configure dbt to run the data models for execution.

Installation#

Install the dbt-postgres adapter version used for this project:

python -m pip install dbt-postgres==1.7.0

After the installation, check the dbt version:

dbt --version

You should see:

Core:

- installed: 1.7.0

Plugins:

- postgres: 1.7.0

Note

You may see an available update, but this can be ignored. Just make sure that the dbt-core

version matches the dbt-postgres version.

Initialization#

While in the local-storage or cloud-storage folder, switch to the dbt project folder and initialize the project:

cd portfolio_optimization_project_dbt && dbt init

You will be prompted to select a database by entering a number. Enter the number for the Postgres database and press enter. You should see the something like the below:

Running with dbt=1.7.0

[ConfigFolderDirectory]: Unable to parse dict {'dir': PosixPath('/Users/rootuser/.dbt')}

Creating dbt configuration folder at

Setting up your profile.

Which database would you like to use?

[1] postgres

Enter a number: 1

Profile portfolio_optimization_project_dbt written to /Users/rootuser/.dbt/profiles.yml using targets sample

configuration. Once updated, you will be able to start developing with dbt.

This will the create the profiles.yml file to add your database credentials.

Open a separate Terminal window. Copy and paste the below:

cd ~ # switch to root directory

cd .dbt # switch to .dbt folder

nano profiles.yml # open yml file for editing

Edit the profiles.yml file to look like the below:

portfolio_optimization_project_dbt:

outputs:

dev:

type: postgres

threads: 1

host: [host]

port: 5432

user: [dev_username]

pass: [dev_password]

dbname: company_stock

schema: public

prod:

type: postgres

threads: 1

host: [host]

port: [port]

user: [prod_username]

pass: [prod_password]

dbname: [dbname]

schema: [prod_schema]

target: dev

You will need to modify the following inputs:

Note

The brackets will need to be removed for each input and the password would need to be in single quotes.

host: If you are configuring this locally, then assign this value to

localhost. If you’re using the cloud setup then you will need to enter the AWS RDS endpoint you created.dev_username: If you are configuring this locally, then assign this value to

postgres. If you’re using the cloud setup then you will need to enter the AWS RDS username you created.dev_password: If you are configuring this locally, then assign this value to the password you created in the PostgreSQL Setup. If you’re using the cloud setup, then you will need to enter the AWS RDS username you created.

To save the profiles.yml content:

Press

Control + O, then Enter to write to theprofiles.ymlfile.Lastly, press

Control + Xto exit theprofiles.ymlfile.Close this

Terminal

Go back to the initial Terminal and test the database connection:

dbt debug

If done correctly, the output will show “All checks passed!”. If not, you will need to verify that the profiles.yml file has the correct info.

Source File Configuration#

To integrate your database credentials and Financial Marketing Prep (FMP) API key, you’ll need to create a source file to add the information. If you haven’t done so already, sign up for the API to obtain a FREE key.

Example

FMP_API_KEY=”?apikey=257u72xb87f2953y557example407n41”

To cretae a source file named .portoptrc, open a separate Terminal and execute the below:

cd ~ && touch .portoptrc && nano .portoptrc

Cloud Setup#

For cloud setup, add the below to the .portoptrc file:

# AWS RDS (``PostgreSQL``) credentials

export CLOUD_HOST=[HOST]

export CLOUD_PORT="5432"

export CLOUD_USER=[USERNAME]

export CLOUD_DBNAME="company_stock"

export CLOUD_PASS=[PASSWORD]

# Financial Marketing Prep (FMP) API URL and key

export FMP_API_URL="https://financialmodelingprep.com/api/v3/profile/"

export FMP_API_KEY=[APIKEY]

You will need to add your credentials to the inputs in the brackets.

Press

Control + O, then Enter to write to the.portoptrcfile.Lastly, press

Control + Xto exit the.portoptrcfile.Close this

Terminal

Local Setup#

For local setup, add the below to the .portoptrc file:

# Local (``PostgreSQL``) credentials

export LOCAL_HOST="localhost"

export LOCAL_PORT="5432"

export LOCAL_USER="postgres"

export LOCAL_DBNAME="company_stock"

export LOCAL_PASS=[PASSWORD]

# Financial Marketing Prep (FMP) API URL and key

export FMP_API_URL="https://financialmodelingprep.com/api/v3/profile/"

export FMP_API_KEY=[APIKEY]

You will need to add your credentials to the inputs in the brackets.

Press

Control + O, then Enter to write to the.portoptrcfile.Lastly, press

Control + Xto exit the.portoptrcfile.Close this

Terminal